By year’s end, travelers will have the option to decline facial recognition scans during airport security checks without concerns about potential delays or travel disruptions. This is just one of the tangible protections being implemented by the Biden administration across various government agencies to prevent misuse of artificial intelligence (AI). These measures could indirectly influence the AI industry through the significant purchasing power of the government.

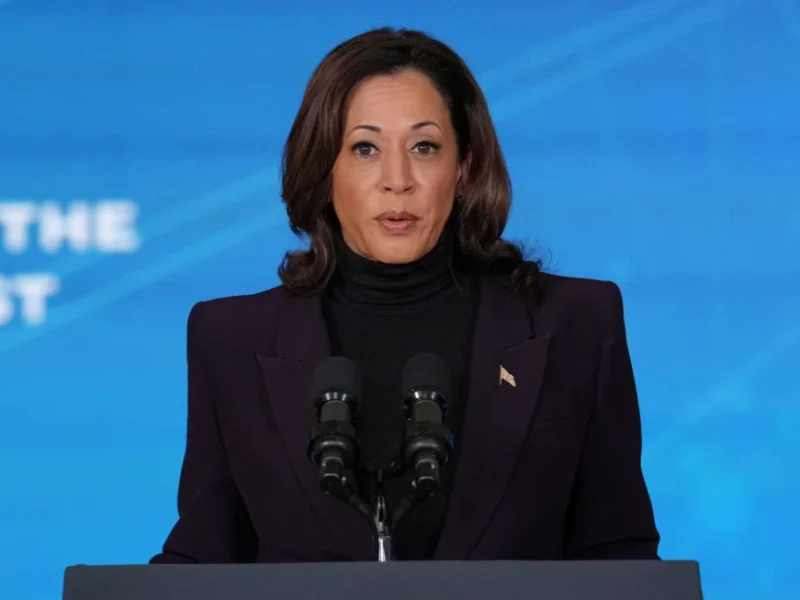

Vice President Kamala Harris unveiled a series of new requirements on Thursday, aiming to ensure that AI is not utilized in discriminatory ways by US agencies. These mandates cover a wide range of scenarios, from TSA screenings to decisions impacting Americans’ healthcare, employment, and housing.

Effective December 1, agencies employing AI tools must ensure they do not infringe upon the rights or safety of citizens. Additionally, each agency is mandated to publicly disclose a comprehensive list of AI systems in use, their purposes, and associated risk assessments.

The Office of Management and Budget (OMB) is also instructing federal agencies to appoint a chief AI officer responsible for overseeing AI usage within their respective organizations.

Harris emphasized that government, civil society, and the private sector share a moral obligation to ensure AI is adopted and advanced responsibly, protecting the public from potential harm while maximizing its benefits. These policies are intended to set a global standard.

These announcements come as the federal government increasingly embraces AI technology, utilizing it for various purposes such as monitoring natural disasters and enhancing law enforcement efforts. OMB Director Shalanda Young stressed the importance of establishing safeguards to maximize the potential of AI while minimizing risks.

The Biden administration recognizes the transformative potential of AI but is also mindful of the risks associated with its misuse. Its efforts include executive orders and collaboration with AI companies on issues such as deepfakes and safety testing.

While executive actions are significant, there are calls for congressional legislation to provide a more comprehensive framework for AI regulation. However, progress on this front has been slow, and expectations for significant results remain limited.

Meanwhile, the European Union has taken decisive steps by approving pioneering AI regulations, once again highlighting the gap between the US and EU in regulating disruptive technologies.