A ChatGPT-style AI assistant, created by Microsoft and integrated into its suite of office applications, will be accessible to everyone starting on November 1st, after undergoing trial periods.

Microsoft 365 Copilot is capable of summarizing meetings conducted in Teams for individuals who opt not to participate. Furthermore, it can swiftly compose emails, generate Word documents, create spreadsheet graphs, and craft PowerPoint presentations.

Microsoft envisions this tool as a means to reduce mundane tasks, although there are concerns that such technology may lead to job displacement. Some also worry that businesses might become overly dependent on AI-powered assistance, which could pose risks to their operations.

In its current state, Microsoft 365 Copilot might fall foul of new AI regulations because it does not clearly disclose when content was generated by AI rather than by a human. Both the European AI Act and China’s AI regulations stipulate that users must be aware when they are interacting with artificial intelligence rather than human agents.

Colette Stallbaumer, the head of Microsoft 365, emphasized that it is the responsibility of the individual using Copilot to provide such clarification. She stated, “It is a tool, and people have a responsibility to use it responsibly.” However, the European Union asserts that it is the responsibility of the companies developing AI tools to ensure their responsible usage.

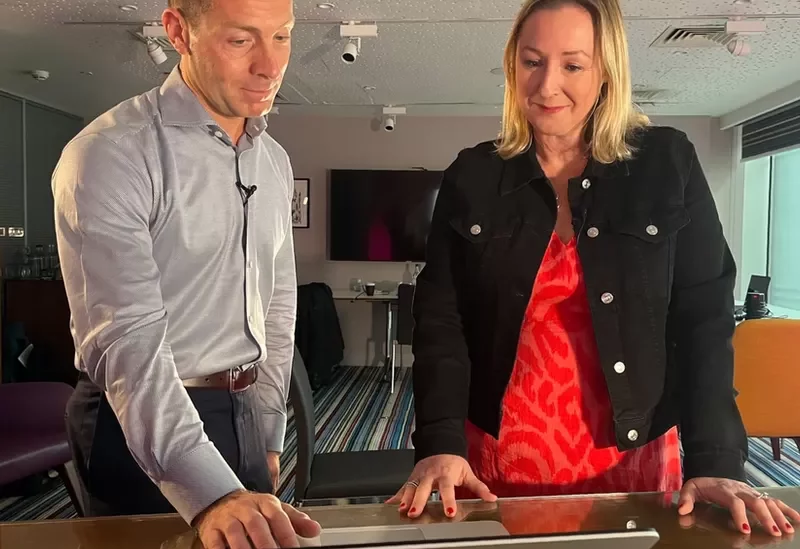

Colette Stallbaumer asserts that it is the individual’s duty to clearly communicate when they are utilizing AI.

You were granted an exclusive opportunity to test Copilot before its broader release. It relies on the same underlying technology as ChatGPT, developed by OpenAI, a company in which Microsoft has invested a significant amount of money.

During your demonstration, Copilot was accessed on the laptop of Derek Snyder, a Microsoft staff member, because it is integrated into an individual’s account with access to their own data or a company’s data. Microsoft assures that the data is securely managed and won’t be utilized to train the technology. According to Colette Stallbaumer, “You only have access to data that you would otherwise be allowed to see,” and the system adheres to data policies.

Your initial impression of Copilot suggests it will be a valuable tool and a highly competent collaborator, especially for office work, which could be particularly advantageous for cost-saving initiatives in businesses.

You observed Copilot summarizing a lengthy email thread related to a fictional product launch in just a few seconds. It then suggested a concise response, which you could easily extend and make more casual using a drop-down menu. The chatbot generated a warm and appreciative reply without any of you having read the content. You had the option to edit the email before sending it or send the AI-generated content without any indication that it involved Copilot.

Furthermore, you witnessed the tool rapidly create a multi-slide PowerPoint presentation in around 43 seconds, based on the content of a Word document. It could incorporate images from the document or use its own royalty-free collection. The generated presentation was simple but effective, and Copilot even provided a suggested narrative to accompany it.

Copilot successfully generated a persuasive PowerPoint presentation.

While Copilot excelled at various tasks, it had limitations. It didn’t fully grasp your request to make a presentation more “colorful” and referred you back to manual PowerPoint tools.

In terms of Microsoft Teams meetings, Copilot showcased its ability to identify discussion themes and offer quick summaries of various threads. It could also summarize individual contributions and present pros and cons in chart format for disagreements, all accomplished within a few seconds. However, the tool was designed not to answer questions related to individual performance in meetings, such as identifying the best or worst speaker.

You asked Mr. Snyder if people might stop attending meetings once they realized Copilot could save them time and effort, to which he humorously suggested that many meetings might become webinars.

An important point to note is that Copilot currently cannot differentiate between individuals who are sharing one device while participating in Teams meetings unless they verbally indicate their presence.

Copilot costs $30 per month and requires an internet connection to function. Critics argue that such technology could significantly disrupt administrative jobs. Carissa Veliz, an associate professor at Oxford University’s Institute for Ethics in AI, expressed concerns about people becoming overly reliant on these tools and the potential consequences when technology fails, gets hacked, or faces glitches or policy changes.