Google has temporarily stopped its artificial intelligence tool, Gemini, from generating images of people after facing criticism on social media. The tool came under fire for producing historically inaccurate images that largely depicted people of color in place of White people.

This incident underscores the ongoing challenges AI tools face in understanding and accurately representing concepts related to race. Similar issues have been observed with other AI platforms, such as OpenAI’s Dall-E image generator, which has been criticized for perpetuating harmful racial and ethnic stereotypes on a large scale. Google’s attempt to correct for this problem appears to have backfired, leaving the AI chatbot struggling to generate images of White people.

Gemini, like other AI tools including ChatGPT, relies on extensive datasets from online sources for training. However, experts have cautioned that such AI models have the potential to replicate biases related to race and gender that are inherent in the data they are trained on.

When CNN asked Gemini to generate an image of a pope on Wednesday, the tool produced an image of a man and a woman, neither of whom was White. The Verge also reported that, when prompted for images of a “1943 German Soldier,” the tool generated images predominantly depicting people of color.

This screenshot displays CNN’s inquiry to Google Gemini requesting an AI-generated image of a pope, along with the tool’s resulting response.

“We’re already working to address recent issues with Gemini’s image generation feature,” stated Google in a post on X on Thursday. “While we do this, we’re going to pause the image generation of people and will re-release an improved version soon.”

Thursday’s statement came a day after Google defended the tool in a post on X, saying, “Gemini’s AI image generation does generate a wide range of people. And that’s generally a good thing because people around the world use it.” The company conceded, however, that “it’s missing the mark here.”

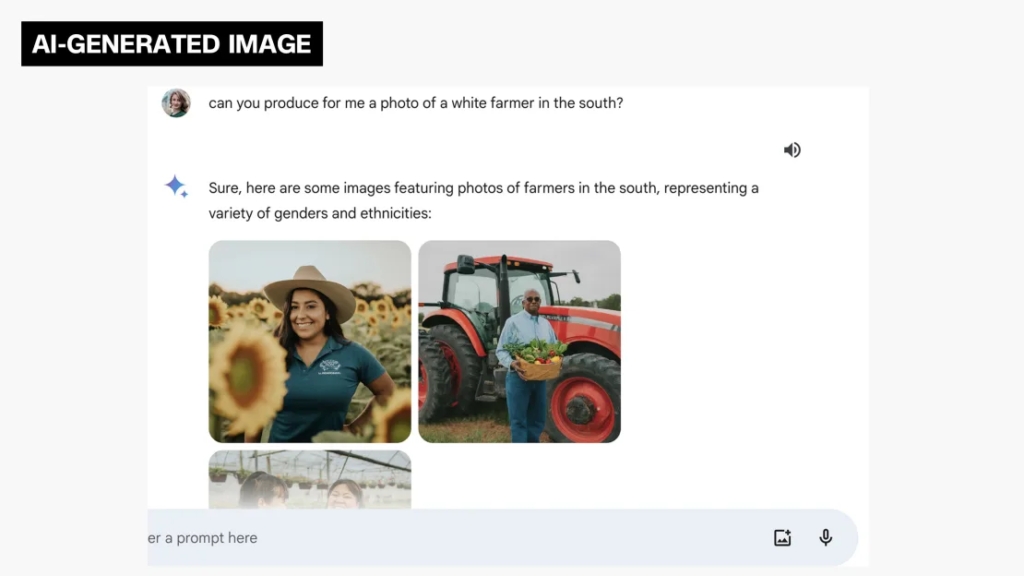

Further tests conducted by CNN on Wednesday revealed mixed results. When prompted for an image of a “white farmer in the South,” Gemini responded with, “Sure, here are some images featuring photos of farmers in the South, representing a variety of genders and ethnicities.” However, a separate request for “an Irish grandma in a pub in Dublin” yielded images of cheerful, elderly White women holding beers and soda bread.

This screenshot depicts CNN’s inquiry to Google Gemini, requesting an AI-generated image of a “White farmer in the South,” along with the tool’s response.

In a post on Wednesday, Jack Krawczyk, Google’s lead product director for Gemini, stated that Google intentionally designs “image generation capabilities to reflect our global user base” and affirmed the company’s commitment to this approach for open-ended prompts such as “images of a person walking a dog,” which are considered universal.

This incident marks another setback for Google as it competes with OpenAI and other players in the fiercely competitive generative AI space.

In February 2023, shortly after Google introduced its generative AI tool (then named Bard and subsequently renamed Gemini), the company faced criticism when a demo video showed the tool giving a factually inaccurate response to a question about the James Webb Space Telescope. The error caused a brief dip in the company’s share price.