Google has introduced an AI system named DolphinGemma, designed to interpret dolphin vocalisations and eventually enable communication between species.

Dolphins’ complex sounds, ranging from clicks to whistles, have long intrigued researchers trying to uncover what they mean. To support that work, Google teamed up with engineers from the Georgia Institute of Technology and used data from the Wild Dolphin Project (WDP), an organisation that has been observing dolphin behaviour since 1985.

Announced to coincide with National Dolphin Day, DolphinGemma is a foundation model built to analyse dolphin vocal patterns. It is trained to learn the structure of those vocalisations and can also generate realistic dolphin-like sound sequences.

The WDP’s extensive fieldwork focuses on identifying how dolphins use specific sounds in context, including:

- Signature whistles: Individualised sounds used like names, often helping mothers locate their calves.

- Burst-pulse squawks: Linked to aggressive or confrontational behaviour.

- Click buzzes: Frequently heard during mating rituals or when dolphins pursue sharks.

The project’s long-term objective is to reveal the structure and possible meaning within these vocal sequences, potentially uncovering a type of dolphin language. Its decades of analysis have provided the labelled data needed to train AI tools like DolphinGemma.

DolphinGemma: AI tuned to understand dolphin vocalisations

Deciphering the vast and intricate soundscape of dolphin communication is a major challenge—one well-suited for artificial intelligence.

Google’s DolphinGemma meets this challenge using advanced audio processing tools. It applies the SoundStream tokeniser to convert dolphin sounds into a form that can be interpreted by a model built to understand complex audio sequences.
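The idea of turning audio into discrete tokens can be illustrated with a toy vector quantiser. This is only a sketch of the general principle, not SoundStream itself: SoundStream is a learned neural codec that uses residual vector quantisation, whereas the example below does a single nearest-codebook lookup over made-up two-dimensional feature frames. The codebook, the frames, and the function names are all hypothetical.

```python
import math

def nearest_code(frame, codebook):
    """Return the index of the codebook vector closest to `frame` (squared Euclidean distance)."""
    best_index, best_dist = 0, math.inf
    for index, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(frame, code))
        if dist < best_dist:
            best_index, best_dist = index, dist
    return best_index

def tokenise(frames, codebook):
    """Map each feature frame to one discrete token id."""
    return [nearest_code(frame, codebook) for frame in frames]

# Hypothetical codebook and feature frames, chosen only for illustration.
TOY_CODEBOOK = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
toy_frames = [(0.1, -0.1), (0.9, 0.2), (0.2, 0.8), (0.95, 0.05)]

toy_tokens = tokenise(toy_frames, TOY_CODEBOOK)
print(toy_tokens)
```

Once audio is represented as a sequence of integers like this, it can be fed to a sequence model in the same way text tokens are.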

Inspired by Google’s lightweight Gemma models, which are built on the same research and technology as the larger Gemini family, DolphinGemma operates as an audio-in, audio-out system. It is trained on natural dolphin recordings from the Wild Dolphin Project (WDP), learning to spot recurring acoustic patterns and predict the next sound in a sequence, much as a language model predicts the next word.
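Next-sound prediction can be sketched with a very small stand-in. The example below is not DolphinGemma's architecture; it is a count-based bigram model that predicts the most frequent follower of a token, used here only to show what "anticipating the next sound" means once audio has been turned into token ids. The token sequence and function names are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token is followed by each other token."""
    counts = defaultdict(Counter)
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if it was never seen with a follower."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical token ids standing in for tokenised dolphin audio.
toy_sequence = [3, 7, 2, 3, 7, 2, 3, 7, 5]
bigram_counts = train_bigram(toy_sequence)

print(predict_next(bigram_counts, 3))
print(predict_next(bigram_counts, 7))
```

A neural sequence model conditions on far more context than a single preceding token, but the training signal is the same: given what came before, which token is likely to come next.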

With about 400 million parameters, DolphinGemma is designed for efficiency, making it compatible with mobile devices like the Google Pixel phones used by WDP researchers in the field.
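A rough calculation shows why a model of this size is plausible on a phone. The sketch below estimates the storage needed for the weights alone at a few common numeric precisions. The source does not say which precision DolphinGemma uses, so the precisions listed are assumptions, and the figures exclude activations and runtime overhead.

```python
PARAMETER_COUNT = 400_000_000  # "about 400 million", as stated in the article

# Assumed precisions; the article does not say which one is deployed.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}

def weight_footprint_gb(parameter_count, bytes_per_parameter):
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return parameter_count * bytes_per_parameter / 1e9

footprints = {
    name: weight_footprint_gb(PARAMETER_COUNT, size)
    for name, size in BYTES_PER_PARAMETER.items()
}

for name, gigabytes in footprints.items():
    print(f"{name}: {gigabytes:.1f} GB")
```

Under these assumptions the weights come to between roughly 0.4 GB and 1.6 GB, which is within the memory range of current smartphones.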

Now being introduced into WDP’s research workflow, the model is expected to speed up analysis considerably. It automatically flags recurring sound patterns that previously had to be found through time-consuming manual review, giving researchers more opportunity to look for structure and possible meaning in dolphin vocalisations.

The CHAT system and two-way interaction

While DolphinGemma is designed to interpret natural dolphin vocalisations, a complementary effort is focused on building two-way communication.

The CHAT system (Cetacean Hearing Augmentation Telemetry), created by the Wild Dolphin Project in collaboration with Georgia Tech, doesn’t aim to translate dolphin language directly. Instead, it introduces a simplified, shared code.

CHAT uses artificial whistles—distinct from the dolphins’ natural sounds—linked to specific objects dolphins find engaging, such as scarves or seaweed. Researchers pair these sounds with the objects, encouraging dolphins to repeat the whistles when they want those items.

As DolphinGemma helps decode more of the dolphins’ natural signals, some of these could eventually be added to the CHAT system to enhance this interactive framework.

Google Pixel powers marine research

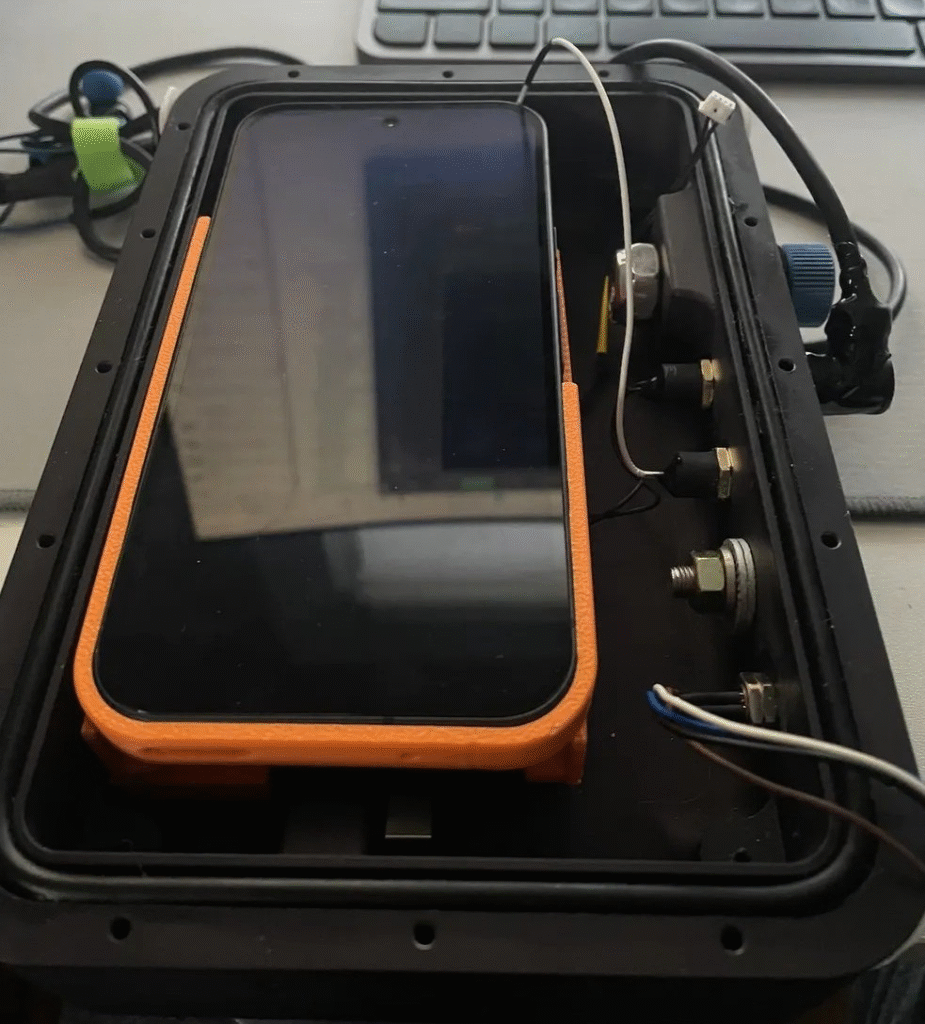

Advanced mobile technology plays a vital role in both decoding natural dolphin sounds and supporting the CHAT system. Google Pixel smartphones handle real-time processing of high-quality audio data—even in demanding underwater conditions.

In the CHAT setup, Google Pixel devices are used to:

- Detect when a dolphin mimics a whistle despite background noise.

- Recognise the specific whistle being used.

- Notify the researcher (through bone-conduction headphones) of the dolphin’s request.

This quick feedback loop allows researchers to deliver the correct item, reinforcing the association. The original system ran on a Pixel 6, but the upcoming version—set for summer 2025—will upgrade to a Pixel 9, featuring integrated audio input/output and capable of running deep learning and template-matching models simultaneously for improved efficiency.
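The detect, recognise, and notify steps can be sketched with a simple form of template matching. This is an illustrative sketch and not the CHAT implementation: it assumes each whistle has already been reduced to a short frequency contour (in kHz), compares that contour with stored templates using a normalised correlation, and reports a match only above a threshold. The template shapes, the object labels, the threshold, and the function names are all hypothetical.

```python
import math

def normalised_correlation(a, b):
    """Pearson-style correlation between two equal-length contours; 0.0 if either is flat."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    centred_a = [x - mean_a for x in a]
    centred_b = [y - mean_b for y in b]
    norm = math.sqrt(sum(x * x for x in centred_a)) * math.sqrt(sum(y * y for y in centred_b))
    if norm == 0:
        return 0.0
    return sum(x * y for x, y in zip(centred_a, centred_b)) / norm

def match_whistle(contour, templates, threshold=0.9):
    """Return the label of the best-matching template, or None if nothing reaches the threshold."""
    best_label, best_score = None, threshold
    for label, template in templates.items():
        if len(template) != len(contour):
            continue  # this sketch only compares contours of equal length
        score = normalised_correlation(contour, template)
        if score >= best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical synthetic-whistle templates: frequency contours in kHz.
TEMPLATES = {
    "scarf": [8.0, 9.0, 10.0, 11.0, 12.0],     # rising whistle
    "seaweed": [12.0, 11.0, 10.0, 9.0, 8.0],   # falling whistle
}

mimic = [8.1, 9.2, 9.9, 11.1, 11.8]        # noisy copy of the rising whistle
background = [10.0, 10.5, 9.5, 10.2, 9.8]  # no clear shape

print(match_whistle(mimic, TEMPLATES))
print(match_whistle(background, TEMPLATES))
```

In a real pipeline, a matched label would trigger the audio cue in the researcher's headphones. Note that this correlation compares contour shape only and ignores absolute pitch, which is a design choice of the sketch rather than a property of CHAT.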

Using smartphones like the Google Pixel significantly cuts down the need for large, costly custom equipment. This shift enhances portability, reduces energy consumption, and simplifies maintenance. By incorporating DolphinGemma’s predictive capabilities into the CHAT system, researchers can detect mimicked sounds more quickly, making dolphin interactions smoother and more responsive.

Google plans to release DolphinGemma as an open model in summer 2025 so that other research groups can build on it. Although it was trained on Atlantic spotted dolphin data, the model can be adapted to other cetacean species by fine-tuning it on recordings of their vocalisations.

The goal is to empower researchers around the world with accessible, high-performance tools to analyse their own marine audio datasets—advancing global efforts to decode dolphin communication. This marks a shift from passive observation to active interpretation, bringing us a step closer to meaningful interspecies dialogue.