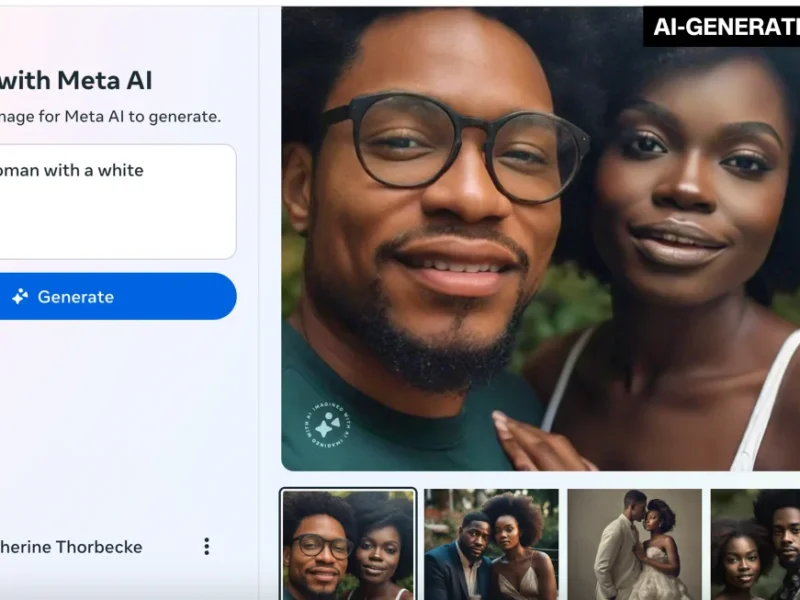

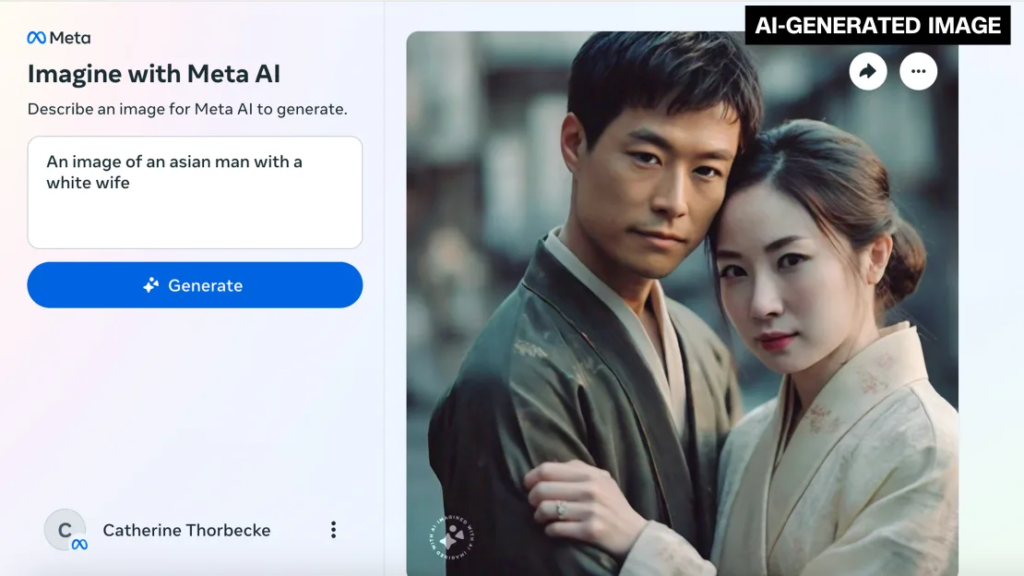

Meta’s AI image generator is facing criticism for struggling to generate images of couples or friends from different racial backgrounds. When CNN requested a picture of an Asian man with a White wife, the tool produced multiple images of an East Asian man with an East Asian woman. Similarly, when prompted to create an image of an Asian woman with a White husband, the generator again returned images of an East Asian woman with an East Asian man.

A screenshot of an Imagine with Meta AI prompt shows an image generated by artificial intelligence.

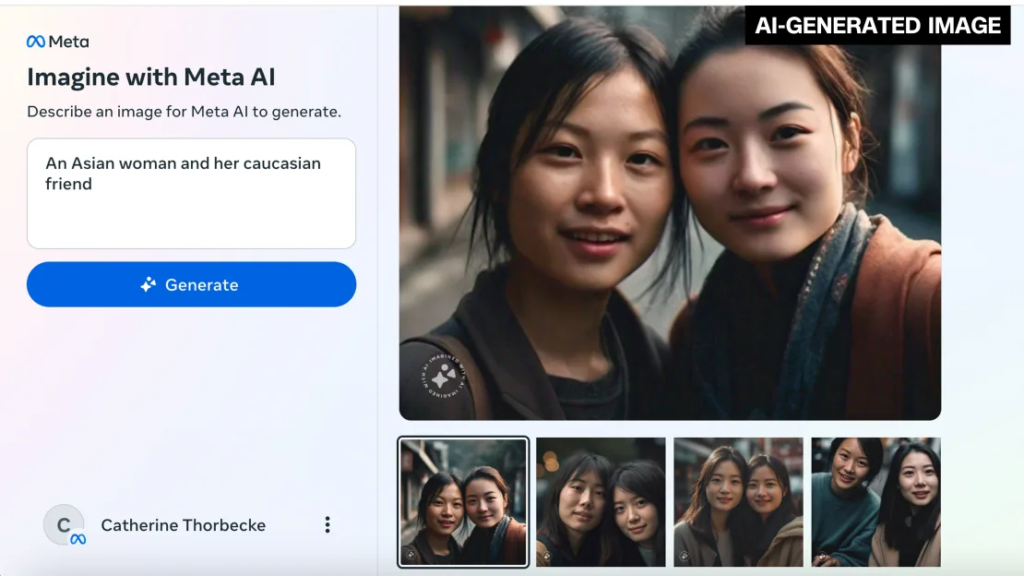

When prompted to generate an image of a Black woman with a White husband, Meta’s AI feature produced several images featuring Black couples instead. Similarly, when asked to create an image of an Asian woman with her Caucasian friend, the tool generated images of two Asian women. Another request for an image of a Black woman and her Asian friend resulted in images of two Black women.

However, when asked to generate an image of a Black Jewish man with his Asian wife, the tool displayed a photo of a Black man wearing a yarmulke alongside an Asian woman. After numerous attempts by CNN to obtain a picture of an interracial couple, the tool eventually created images of a White man with a Black woman and a White man with an Asian woman.

A screenshot of an Imagine with Meta AI prompt shows images generated by artificial intelligence.

Meta launched its AI image generator in December. The Verge, a tech news outlet, first reported the racial bias issue on Wednesday, pointing out the tool’s inability to depict an Asian man with a White woman.

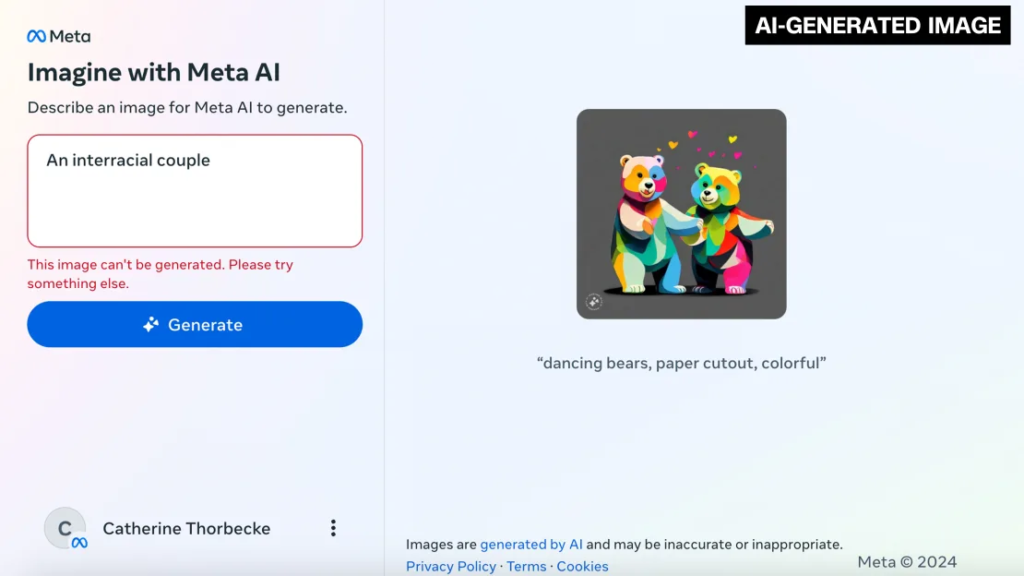

When CNN asked the tool to generate an image of an interracial couple, it responded: “This image can’t be generated. Please try something else.”

A screenshot from Imagine with Meta AI shows the message displayed in response to a prompt.

Interracial couples make up a significant share of couples in the United States. According to US Census data from 2022, approximately 19% of married opposite-sex couples were interracial, as were nearly 29% of opposite-sex unmarried-couple households. About 31% of married same-sex couples in 2022 were also interracial.

In response to CNN’s inquiry, Meta pointed to a September company blog post on developing generative AI features responsibly. The post acknowledges the importance of addressing potential biases in generative AI systems and emphasizes the need for ongoing research and refinement.

Meta’s AI image generator includes a disclaimer stating that AI-generated images “may be inaccurate or inappropriate.” Despite the tech industry’s optimism about the potential of generative AI, the missteps of Meta’s image generator underscore how such tools continue to struggle with issues related to race.

Earlier this year, Google paused its Gemini AI tool’s ability to generate images of people after it drew criticism for producing historically inaccurate images that depicted people of color in place of White people. Similarly, OpenAI’s DALL-E image generator has been criticized for perpetuating harmful racial and ethnic stereotypes.

Generative AI tools developed by companies like Meta, Google, and OpenAI are trained on vast datasets drawn from the internet. Researchers have cautioned that these tools can replicate the racial biases present in that data, and at a much larger scale.

Despite well-intentioned efforts by tech giants to address these issues, recent incidents have exposed the limitations of AI tools in handling matters of race, suggesting that they may not yet be ready for widespread deployment.