Gemini is being rolled out as an update to Google’s chatbot Bard, though not yet in the UK or the EU.

Google has released a new artificial intelligence model that it says performs better than ChatGPT in the majority of tests and shows “advanced reasoning” in a variety of formats, including the capacity to examine and grade physics homework submitted by students.

The Gemini model is the first to be unveiled since the global AI safety summit last month, at which tech companies committed to working with governments to test cutting-edge systems both before and after they are released. Google said it was in talks with the UK’s recently established AI Safety Institute about testing the most powerful version of Gemini, which is scheduled for release next year.

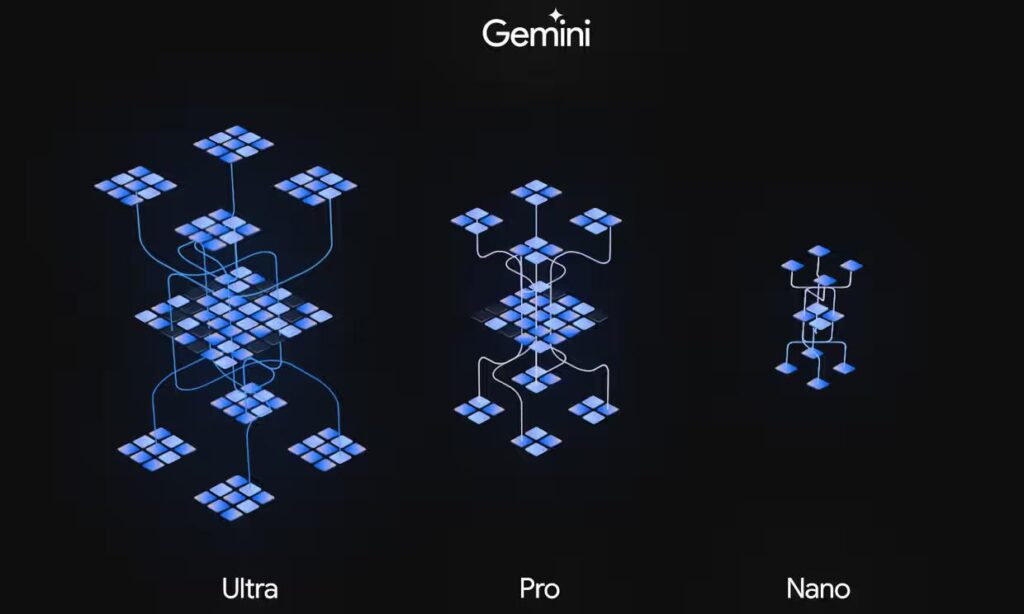

The model is “multimodal,” meaning it can understand text, voice, graphics, video, and computer code all at once. It is available in three different versions.

Gemini is being released on Wednesday as an update to Google’s chatbot Bard, initially in more than 170 countries, including the US. It will also be integrated into Google products, including its search engine.

However, the Bard update will not be made available in the UK or the rest of Europe while Google seeks regulatory approval.

Demis Hassabis, the head of DeepMind, the London-based Google division that created Gemini, said: “It’s been the most complicated project we’ve ever worked on, I would say the biggest undertaking.” He added that it had required a great deal of work.

Two scaled-down models, Gemini Pro and Nano, will be available from Wednesday. The Pro model can be accessed through Google’s Bard chatbot, while the Nano version will run on Android-powered smartphones.

The most powerful version, Ultra, is undergoing external testing and will not be made available to the public until early 2024. At that point it will also be integrated into Bard Advanced, a version of Bard.

According to Hassabis, the Ultra model will go through external “red team” testing, in which professionals evaluate a product’s security and safety. Google will then share the results with the US government in accordance with an executive order that Joe Biden signed in October.

When asked if Gemini had been tested in accordance with the plans announced at the Bletchley Park AI safety conference, Hassabis replied that Google was in talks with the UK government regarding the AI Safety Institute testing the model.

He said: “We’re talking with them about how we want them to do that.” The testing, which applies only to the most sophisticated, or “frontier”, versions, will not include the Pro or Nano models.

Sissie Hsiao, the general manager of Google’s Bard program, said the Pro-powered version of Bard would not be available in the UK for now. Nor is it being released in the European Economic Area, which includes the EU, or in Switzerland. “We are working with local regulators,” she said. Google did not disclose the regulatory concerns behind the delays in the UK and EU.

According to Google, Ultra fared better on 30 out of 32 benchmark tests, including reasoning and picture interpretation, than “state-of-the-art” AI models, including ChatGPT’s most potent model, GPT-4. On six out of eight tests, the Pro model fared better than GPT-3.5, the technology that powers ChatGPT’s free edition.

Google did acknowledge that “hallucinations”, or incorrect responses, remained an issue with the model. Eli Collins, the head of product at Google DeepMind, said it was still an unsolved research problem.

Although all of the Gemini versions are multimodal in terms of the inputs they can understand, the Pro and Nano versions being made available to the public this month can only respond in text or code for now.

In promotional videos, Google demonstrated Gemini’s capabilities by having the Ultra model read students’ handwritten physics homework and provide detailed solutions, including rendered equations. Other videos showed the Pro version correctly identifying a drawing of a duck and working out which film a person was acting out in a smartphone video: in this case, a clumsy rendition of the well-known “bullet time” scene from The Matrix.

Collins said that Gemini’s most powerful model had demonstrated “advanced reasoning” and could show “novel capabilities”, meaning the ability to carry out tasks that previous AI models had not demonstrated.

Concerns about AI range from the mass production of misinformation to the development of “superintelligent” systems that evade human control. AI is the term for computer systems that can perform tasks that typically require human intelligence. Some researchers worry about the emergence of artificial general intelligence (AGI), or AI that can carry out a variety of tasks at a level of intelligence comparable to or higher than that of humans.

Asked whether Gemini constituted a significant step towards AGI, Hassabis said: “I think these multimodal foundational models are going to be [a] key component of AGI, whatever that final system turns out to be.” He added that there were still gaps, which the company was investigating and developing solutions for.

Google said that Ultra, with a score of 90%, was the first AI model to outperform human experts on MMLU (massive multitask language understanding), a benchmark covering 57 subjects including maths, physics, law, medicine and ethics. Ultra also powers AlphaCode2, a new code-writing tool from Google that is expected to outperform 85% of competition-level human programmers.

According to Hassabis, the open web was one of many sources of data used to train Gemini. The publishing and creative industries have objected to AI companies using copyrighted material from the web to build their models.