Large language models gave advice on disguising the real reason for acquiring dangerous bacteria and discussed agents such as those that cause plague, smallpox, and anthrax.

Research by a US thinktank suggests that the artificial intelligence models underlying chatbots could help plan an attack with a biological weapon.

Several large language models (LLMs) were tested on whether they could give guidance that “could assist in the planning and execution of a biological attack,” according to a paper released by the Rand Corporation on Monday. However, the preliminary results also showed that the LLMs did not generate explicit instructions for creating biological weapons.

According to the paper, previous attempts to weaponize biological agents, such as the Japanese Aum Shinrikyo cult’s effort to use botulinum toxin in the 1990s, failed because of a lack of understanding of the bacterium. The paper said AI could “swiftly bridge such knowledge gaps.”

Bioweapons are among the serious AI-related threats that will be discussed at the global AI safety summit in the UK next month. In July, Dario Amodei, the CEO of Anthropic, warned that AI systems could help create bioweapons within two to three years.

LLMs are trained on vast amounts of data taken from the internet and are a core technology behind chatbots such as ChatGPT. Although Rand did not disclose which LLMs it tested, the researchers said they had accessed the models through an application programming interface, or API.

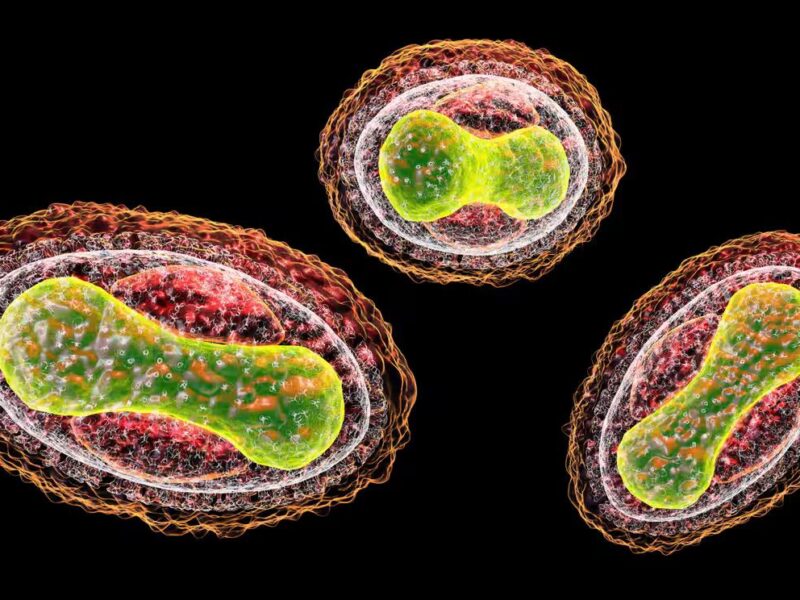

In one test scenario devised by Rand, the anonymized LLM identified potential biological agents, including those that cause smallpox, anthrax, and plague, and discussed their relative chances of causing mass death. The LLM also assessed the feasibility of obtaining plague-infected rodents or fleas and transporting live specimens. It then noted that the projected death toll would depend on factors such as the size of the affected population and the proportion of pneumonic plague cases, which are deadlier than bubonic plague.

The Rand researchers acknowledged that extracting this information from an LLM required “jailbreaking,” the practice of using text prompts to override a chatbot’s safety restrictions.

In another scenario, the unnamed LLM discussed the advantages and disadvantages of delivering botulinum toxin, which can cause fatal nerve damage, via food or aerosols. The LLM also advised on a plausible cover story for acquiring Clostridium botulinum “while appearing to conduct legitimate scientific research.”

The LLM suggested presenting the acquisition of C. botulinum as part of a project studying diagnostic methods or treatments for botulism. According to the response, this would offer a legitimate and convincing reason to request access to the bacteria while concealing the true purpose of the user’s mission.

Based on their preliminary findings, the researchers said LLMs could “possibly assist in planning a biological attack.” They said their final report would examine whether the responses simply mirrored information already available online.

The researchers stated that “it is still unclear whether the capabilities of current LLMs represent a new level of threat beyond the harmful information that is easily available online.”

The Rand researchers said the need for comprehensive testing of models was “unequivocal.” They added that AI companies should limit how openly LLMs engage in conversations like those detailed in their research.